2 Introduction

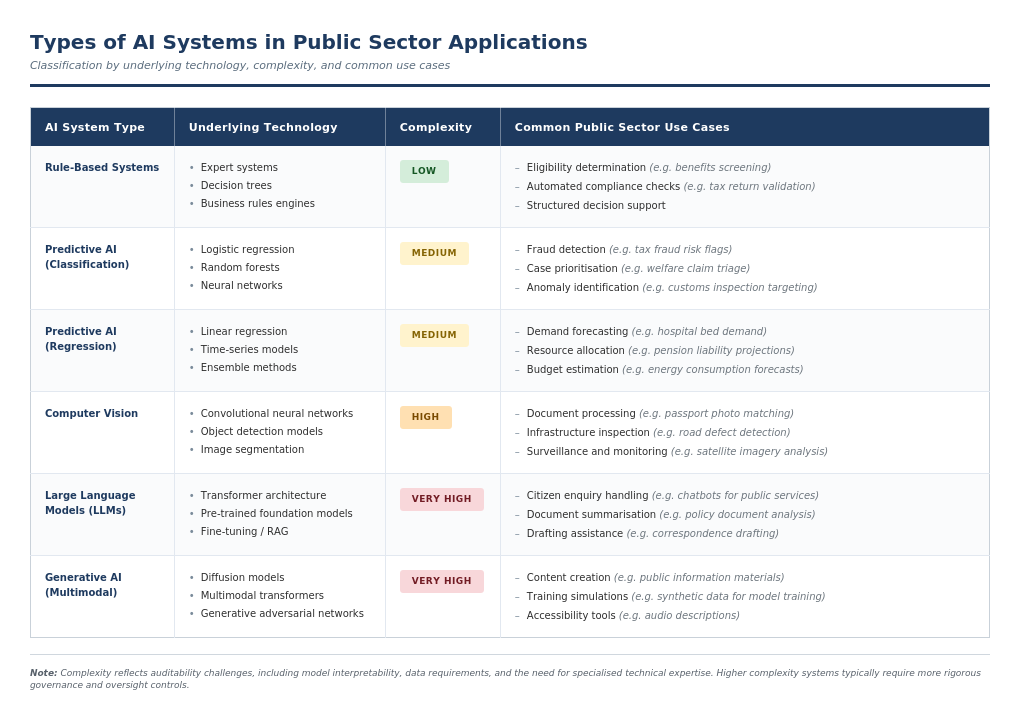

Figure 2.1: Types of AI systems in public sector applications

Artificial intelligence (AI) is developing rapidly and is increasingly used in the public sector. Public sector bodies are adopting AI to improve efficiency, deliver better services, and reduce costs.

AI systems are human-made technologies designed to perform tasks that typically require human intelligence. They learn from data and adapt to achieve specific goals, often improving their performance without being explicitly reprogrammed. AI encompasses fields such as machine learning, natural language processing, computer vision, speech recognition, planning, and robotics. It enables capabilities such as perception, reasoning, decision-making, and problem-solving. Most AI systems rely on machine learning, in which models learn patterns from data rather than following explicitly programmed rules.

Predictive AI systems use historical data to identify patterns and make predictions about new cases. For example, they can estimate the likelihood of illness based on health records or flag potential errors in tax returns. These systems are commonly used for classification (such as sorting cases into categories) or regression (such as predicting numerical values).
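To make the distinction between classification and regression concrete, the sketch below fits one model of each kind to a handful of invented historical cases. It is a minimal illustration in plain Python, not a production technique: the data, the features, and the helper functions `fit_threshold_classifier` and `fit_linear_regression` are hypothetical, and real predictive systems typically use many features and established libraries.

```python
from statistics import mean


def fit_threshold_classifier(xs, labels):
    """Classification (hypothetical helper): learn the cut-off on a single
    feature that misclassifies the fewest historical cases."""

    def errors(threshold):
        # Count cases where "x >= threshold" disagrees with the known label.
        return sum((x >= threshold) != label for x, label in zip(xs, labels))

    best = min(sorted(set(xs)), key=errors)
    return lambda x: x >= best


def fit_linear_regression(xs, ys):
    """Regression (hypothetical helper): learn slope and intercept by
    ordinary least squares on one feature."""
    x_bar, y_bar = mean(xs), mean(ys)
    slope = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys)) / sum(
        (x - x_bar) ** 2 for x in xs
    )
    intercept = y_bar - slope * x_bar
    return lambda x: slope * x + intercept


# Invented historical data: share of income claimed as deductions,
# and whether a past check found an error in the tax return.
deduction_share = [0.05, 0.10, 0.12, 0.30, 0.35, 0.40]
had_error = [False, False, False, True, True, True]
flag_return = fit_threshold_classifier(deduction_share, had_error)

# Invented historical data: pages in a case file and days taken to process it.
pages = [1, 2, 3, 4]
days = [3, 5, 7, 9]
predict_days = fit_linear_regression(pages, days)

print(flag_return(0.25), flag_return(0.50))  # a category for each new case
print(predict_days(5))  # a numerical value for a new case
```

The classifier outputs a category (flag or do not flag), while the regression model outputs a number. Both derive their behaviour entirely from the historical examples they are given, so errors or gaps in that data carry through to predictions about new cases.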

Generative AI systems are designed to create new content (such as text, images, video, or audio) by learning from large datasets. They can produce realistic outputs, often with little or no human guidance.
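As a toy illustration of the generative principle, the sketch below records which word follows which in a few training sentences and then samples a new word sequence from those statistics. This is a deliberately simplified bigram model, not how modern generative AI systems work internally (they use deep neural networks trained on far larger datasets); the corpus and the function names are hypothetical.

```python
import random
from collections import defaultdict


def train_bigram_model(corpus):
    """Record, for each word, every word that followed it in the training text."""
    followers = defaultdict(list)
    for sentence in corpus:
        words = sentence.split()
        for current, following in zip(words, words[1:]):
            followers[current].append(following)
    return followers


def generate(followers, start, max_words, rng):
    """Build new text by repeatedly sampling one of the observed next words."""
    words = [start]
    # Stop at the length limit, or when the last word was never followed by anything.
    while len(words) < max_words and followers.get(words[-1]):
        words.append(rng.choice(followers[words[-1]]))
    return " ".join(words)


# Hypothetical training sentences.
corpus = [
    "the office reviews the claim",
    "the auditor reviews the report",
    "the report is published",
]
model = train_bigram_model(corpus)
text = generate(model, "the", max_words=8, rng=random.Random(0))
print(text)
```

Every word pair in the output appeared in the training data, yet the sequence as a whole may never have been seen before: the output is new content assembled from learned patterns, produced without human guidance beyond the starting word.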

Foundation models, also known as general-purpose AI models, are trained on very large and diverse datasets. They use deep learning techniques and can be adapted to a wide range of applications, from image recognition to scientific research. Large Language Models (LLMs) are a key type of foundation model, trained mainly on text. They support tasks such as translation, summarisation, and conversation by generating human-like language.

The use of AI in public services brings new opportunities but also introduces challenges. These include protecting personal data, ensuring decisions are fair and explainable, and avoiding bias or discrimination. Poorly designed or managed AI systems can increase workloads, cause delays, or undermine public trust.

To address these risks, both internal controls and external audits are essential. International and national bodies have developed principles and guidelines to support the responsible use of AI.5 As AI-specific legislation emerges, new regulatory and oversight bodies are being established. For example, the EU AI Act introduces dedicated authorities to enforce compliance and support auditability, including notified bodies6 and market surveillance authorities.7 These bodies are granted extensive rights of access to an AI system’s technical documentation and underlying components, including data and source code. AI oversight frameworks may also lead to new standards and certifications, which could make AI audits easier.

Supreme Audit Institutions (SAIs) play a key role in holding government to account, and this can include helping to ensure the safe and ethical use of AI. Auditing AI systems can be challenging, but it is increasingly necessary as these technologies become more widespread in the public sector. Certification schemes for specialised, licensed AI auditors are being developed.8

This paper offers guidance for SAIs on auditing AI systems in government. It highlights key risks and outlines the mitigations and controls that an auditor should expect to see. AI audits may include both performance and compliance elements. The paper is not a prescriptive set of criteria; it should be used as a guide when developing or adapting audit methodologies.

AI systems are rarely stand-alone; they are usually embedded within wider IT processes. Auditors should always assess the risks associated with the broader IT environment, which may lead to a wider IT audit. This may require expertise from AI or IT specialists. This guide focuses specifically on the audit of the AI component.

Recommendations in this paper draw on published research and the experience of the authoring SAIs, including audits of AI systems and other software development projects. The paper reflects the AI audit experience of the authoring SAIs at the time of writing9 and may be updated as further audit experience and new research become available.

Chapter 3 sets out possible audit criteria, covering national regulations, international standards, and widely used guidelines. Chapter 4 presents an audit catalogue10 structured around key areas: project management and governance; data; system development; evaluation before deployment, including ethical considerations such as explainability and fairness; deployment and change management; and AI systems in production. For each area, it gives an overview, identifies AI-specific risks, and suggests controls.

This paper comes with appendices containing additional information:

- Appendix 1 (Classic IT audit components in AI context) summarises the IT audit components relevant to AI.

- Appendix 2 (Personal data and GDPR in the context of AI) describes risks related to personal data and breaches of the GDPR.

- Appendix 3 (Equality and fairness measures in classification systems) gives an overview of the most relevant equality and fairness measures for classification applications.

- Appendix 4 (Glossary) defines the technical terms used in this paper and lists the abbreviations used in this guide.

- Appendix 5 (Roles) summarises the roles in the audited body that may be relevant when auditing AI systems.
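To give a flavour of what fairness measures for classification systems compute, the sketch below calculates two widely used ones for a small invented set of predictions: the demographic parity difference (the gap between two groups in the rate of positive predictions) and the equal opportunity difference (the gap in true positive rates). The choice of these two measures, the data, and the function names are assumptions made for illustration; they are not necessarily the measures or notation used in Appendix 3.

```python
def selection_rate(preds):
    """Share of cases that the model classified as positive (1)."""
    return sum(preds) / len(preds)


def true_positive_rate(preds, labels):
    """Share of truly positive cases that the model also classified as positive."""
    hits = [p for p, y in zip(preds, labels) if y == 1]
    return sum(hits) / len(hits)


def subset(values, groups, group):
    """Keep only the values that belong to one group."""
    return [v for v, g in zip(values, groups) if g == group]


def demographic_parity_difference(preds, groups, a, b):
    """Difference in selection rates between group a and group b."""
    return selection_rate(subset(preds, groups, a)) - selection_rate(
        subset(preds, groups, b)
    )


def equal_opportunity_difference(preds, labels, groups, a, b):
    """Difference in true positive rates between group a and group b."""
    tpr_a = true_positive_rate(subset(preds, groups, a), subset(labels, groups, a))
    tpr_b = true_positive_rate(subset(preds, groups, b), subset(labels, groups, b))
    return tpr_a - tpr_b


# Invented example: group membership, model predictions, and true outcomes.
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
preds = [1, 1, 0, 0, 1, 0, 0, 0]
labels = [1, 1, 0, 1, 1, 1, 0, 0]

dpd = demographic_parity_difference(preds, groups, "A", "B")
eod = equal_opportunity_difference(preds, labels, groups, "A", "B")
print(f"{dpd:.2f} {eod:.2f}")  # prints: 0.25 0.17
```

A value of 0 means the two groups are treated alike on that measure. Which measure is appropriate, and what size of gap is acceptable, depends on the context of the system being audited.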

A separate audit helper tool is also available. This tool offers a range of audit questions, mapped to each stage of the AI lifecycle, and helps auditors identify suitable audit evidence.

Preparation of the visuals in this guide, as well as consolidation of the guide and the helper tool, was partly supported by AI tools, with thorough verification by the authors.

We list them and other possible sources for audit criteria in Chapter 3 of this paper.↩︎

EU AI Act, Annex VII; Article 3(22). A ‘notified body’ is an organisation officially approved under EU law to check whether certain products or systems meet the required standards.↩︎

EU AI Act, Article 74; Article 3(26). A ‘market surveillance authority’ is the national agency responsible for monitoring products on the market and enforcing compliance with EU rules.↩︎

For example, ISACA’s AI certification.↩︎

Last update: December 2025.↩︎

By ‘audit catalogue’ we mean a set of guidelines covering both the suggested content of risk-based audit topics and the methodology for performing the corresponding audit tests.↩︎