3.1 Legal framework for AI applications

Different countries are introducing regulations to guide how AI is developed and used. Auditors need to understand the legal landscape to assess whether public sector organisations are compliant and are using AI safely and ethically.

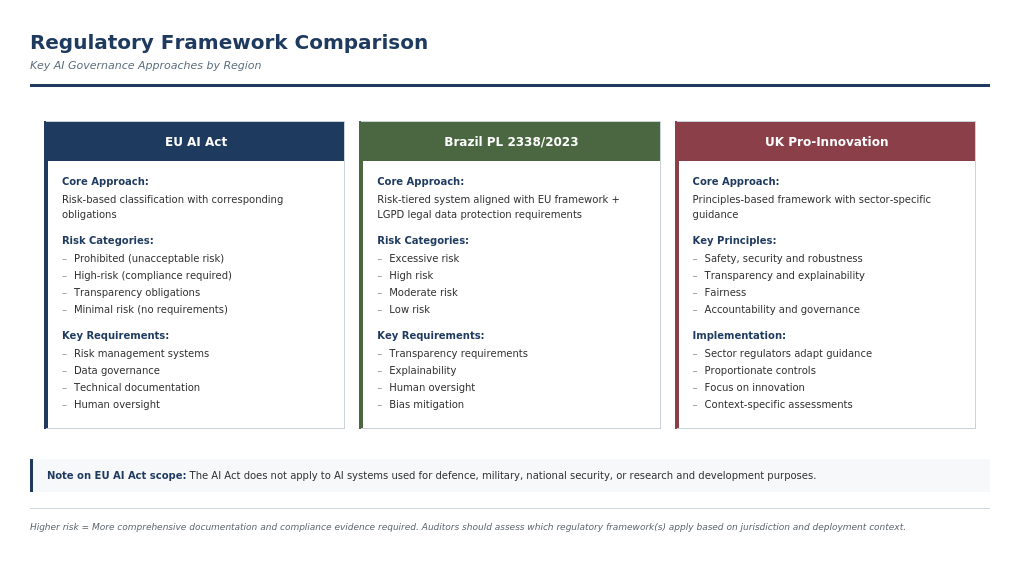

Figure 3.1: Comparison of the regulatory frameworks outlined in this guide.

3.1.1 European Union: The EU AI Act

The EU AI Act11 is the first comprehensive legislation to regulate AI in the European Union. It aims to ensure that AI systems placed on the EU market are safe and respect fundamental rights. The Act takes a product safety approach, similar to rules for other high-risk technologies.

3.1.1.1 Scope of the AI Act

The Act defines AI broadly but excludes simple rule-based expert systems.12 It explicitly states that an AI system infers how to generate outputs from the inputs it receives.13 Organisations can have different roles under the Act: they may be “providers” if they develop or commission AI systems, or “deployers” if they use AI systems in their operations.

The Act applies to most AI systems offered or used in the EU, but there are some exceptions.14 For example, it does not cover systems used only for national security, defence, or scientific research.

3.1.1.2 Risk-based approach

The Act uses a risk-based framework. It classifies AI systems into four categories.15 Figure 3.1 provides an overview of the different categories of AI systems in the AI Act.

- Prohibited AI: Some uses are banned outright, such as social scoring, predicting criminal offences based solely on profiling, and emotion recognition in workplaces or schools.16

- High-risk AI: These systems include, for example, those used in law enforcement, critical infrastructure (including medical devices), and access to essential services. Most of the Act’s requirements focus on these systems.

- AI with transparency obligations: Systems that interact directly with people must be clearly labelled as AI, regardless of risk.

- Other AI systems: Most other AI systems are not subject to specific rules but must still comply with general EU laws.
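As a rough illustration of how the four categories relate, the sketch below triages a use case into one or more categories. The keyword sets are hypothetical and only repeat the examples listed above; a real classification requires legal analysis of the Act and its annexes, so this is a first-pass aid rather than a compliance determination.

```python
from enum import Enum


class RiskTier(Enum):
    PROHIBITED = "prohibited"
    HIGH_RISK = "high-risk"
    TRANSPARENCY = "transparency obligations"
    OTHER = "other"


# Hypothetical lookup sets, limited to the examples named in this section.
PROHIBITED_USES = {"social scoring", "emotion recognition in workplaces or schools"}
HIGH_RISK_AREAS = {"law enforcement", "critical infrastructure", "access to essential services"}


def triage(use_case: str, interacts_with_people: bool) -> list[RiskTier]:
    """Return the categories that may apply to a use case (illustrative only)."""
    if use_case in PROHIBITED_USES:
        return [RiskTier.PROHIBITED]
    tiers = []
    if use_case in HIGH_RISK_AREAS:
        tiers.append(RiskTier.HIGH_RISK)
    # Transparency obligations apply regardless of risk level,
    # so they can be combined with the high-risk category.
    if interacts_with_people:
        tiers.append(RiskTier.TRANSPARENCY)
    return tiers or [RiskTier.OTHER]


if __name__ == "__main__":
    print(triage("law enforcement", interacts_with_people=True))
    print(triage("internal document search", interacts_with_people=False))
```

The function returns a list because the transparency obligations can apply in addition to the high-risk requirements.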

3.1.1.3 Requirements

For high-risk AI systems, providers must17:

- Assess and document risks.

- Keep detailed system records and logs.

- Ensure human oversight.

- Meet standards for data quality, robustness, cybersecurity, and accuracy.

- Register high-risk systems in an EU-wide database.

The European Commission, together with Member States, will establish and maintain a database for high-risk systems. It will include descriptions of the application, data, and functionality of AI systems, as well as user manuals. Systems that are not classified as high-risk solely due to an exemption must also be registered here.

For all systems regardless of risk classification:

- If AI systems interact directly with individuals, the systems and their outputs must be labelled as AI or AI-generated.18

- AI literacy must be ensured. Providers and deployers must take measures to ensure that users possess sufficient competence in using AI systems.

The EU AI Act encourages voluntary codes of conduct under which the requirements for high-risk systems are applied to other AI systems.

3.1.1.4 General-Purpose AI models

The Act introduces special rules for general-purpose AI models.

Particularly powerful general-purpose AI models are classified as models with systemic risk. This classification is based on whether the model has high-impact capabilities. A model is presumed to have such capabilities, and therefore systemic risk, if the cumulative compute used for its training exceeds 10²⁵ floating point operations (FLOPs).
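The compute threshold can be checked with simple arithmetic. The sketch below compares a training-compute figure against the 10²⁵ FLOPs presumption. The estimate of roughly 6 FLOPs per parameter per training token is a common rule of thumb for dense transformer models and is an assumption here, not something the Act prescribes; the model sizes are hypothetical.

```python
# Presumption threshold named in the text: more than 10^25 FLOPs of training compute.
SYSTEMIC_RISK_THRESHOLD_FLOPS = 1e25


def estimate_training_flops(n_parameters: float, n_tokens: float) -> float:
    """Rough training-compute estimate.

    Assumption: about 6 FLOPs per parameter per training token,
    a widely used approximation that is not part of the Act.
    """
    return 6.0 * n_parameters * n_tokens


def presumed_systemic_risk(training_flops: float) -> bool:
    """True if the compute figure exceeds the presumption threshold."""
    return training_flops > SYSTEMIC_RISK_THRESHOLD_FLOPS


if __name__ == "__main__":
    # Hypothetical model A: 70 billion parameters, 15 trillion tokens (about 6.3e24 FLOPs).
    flops_a = estimate_training_flops(70e9, 15e12)
    print(presumed_systemic_risk(flops_a))  # False

    # Hypothetical model B: 400 billion parameters, 15 trillion tokens (about 3.6e25 FLOPs).
    flops_b = estimate_training_flops(400e9, 15e12)
    print(presumed_systemic_risk(flops_b))  # True
```

Where a provider reports its training compute directly, that figure is preferable to an estimate of this kind.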

It is important to distinguish between a general-purpose AI model (such as GPT-4) and a system built on top of such a model (such as ChatGPT). The regulation on general-purpose AI mainly applies to the model itself.19 General-purpose AI models are not classified as AI systems under the Act, so they are not subject to rules for prohibited or high-risk systems. However, if a general-purpose model is used as part of a high-risk AI system, the provider and deployer of that system must ensure it meets all relevant requirements. Providers of general-purpose AI models must supply information and documentation about the model.20 This is to help users understand the model’s capabilities and limitations, and to support compliance with the Act.21

3.1.1.5 Implementation of the AI Act

The EU AI Act entered into force in August 2024. Its requirements will be phased in over several years. For AI systems that were already in operation, relevant requirements may only apply partially or with delay.

The AI Act will be implemented by the EEA EFTA states (Norway, Liechtenstein and Iceland) with some delay.

3.1.2 UK legislation and guidance

The UK relies on existing sector-specific laws and regulators, rather than horizontal AI-specific legislation, to govern the development and use of AI in the public sector. In 2023, the government published the white paper A pro-innovation approach to AI regulation,22 which sets out five principles for developing and regulating AI: safety, security and robustness; appropriate transparency and explainability; fairness; accountability and governance; and contestability and redress. The principles describe what AI systems deployed in the public sector must achieve, as well as how to achieve these outcomes in practice. Auditors can use this guidance to understand what best practice might look like when deploying AI systems.

Existing regulators are tasked with applying these principles within their respective sectors, developing regulatory guidance and oversight for the deployment of the technology. For example, the Data (Use and Access) Act expands the circumstances in which an organisation can make decisions based on automated processing of personal information, and the Information Commissioner’s Office (ICO) provides data protection guidance in this area.23

The UK government has provided principles and guidance for the public sector. For example, the AI Playbook for the UK Government provides guidance for using AI safely, effectively and securely for employees of the civil service and government organisations. It outlines ten principles for AI use in the government and public sector. Auditors can use this guidance to inform best practice for purchasing and deploying AI systems.24 Additionally, the Algorithmic Transparency Recording Standard (ATRS) is now mandatory for central government departments.25 The ATRS establishes a standardised method for public sector organisations to publish information about how and why they use algorithmic tools.

The UK government has also expanded existing and related guidance to incorporate recommendations for AI systems. For example:

- The AQuA Book provides guidance on producing robust analysis aligned with business needs. It outlines factors for determining appropriate assurance, including guidance specific to the assurance of AI systems.26 These factors include business criticality; novelty of approach; level of precision required in outputs; amount of resource available to carry out the assurance; and effects on the public. The book also includes considerations for the design of AI models, including risk assessment, impact assessment, bias audits and compliance audits.

- The Magenta Book provides guidance on evaluating the impact of AI interventions.27

- The National Cyber Security Centre sets out principles for securing machine learning systems.28

- Guidelines for AI procurement are also available, outlining best practice for acquiring AI technologies in government.29

Additionally, there is existing guidance for the approval and assurance of AI:

- The UK government’s Introduction to AI assurance provides an overview of key AI assurance concepts. Technical detail of AI assurance is not included, but it is a useful source for background information.30

- The Financial Reporting Council’s AI in Audit outlines use cases for AI in audit alongside documentation guidance for AI tools.31

- The UK government’s guidance on digital and technology spend control covers guidance for AI procurement in the Risk and Importance framework.32

3.1.3 Brazil legislation and guidance

Brazil is developing a legal framework to regulate AI. Senate Bill No. 2338 of 202333 sets out rules for the development and use of AI across the country. The bill covers a wide range of areas, including economic development, science and technology, civil law, and individual rights.

The Senate has approved the bill, and it is now under review by the Chamber of Deputies. Lawmakers have proposed several amendments. These include requirements for labelling AI-generated content, promoting research and training, increasing penalties for defamation using AI, and protecting children and adolescents. Other amendments address the development and use of AI, changes to Brazil’s General Data Protection Law (LGPD), public participation in regulation, and legal obligations for AI developers. The bill also covers copyright, intellectual property, labour rights, and freedom of expression. The bill’s scope is broad. It addresses responsibility, safety, trust, respect for human rights, democracy, environmental protection, sustainable development, risk classification, governance, impact assessment, civil liability, and penalties.

Brazil’s Federal Court of Accounts (TCU) has also published practical guidance for the ethical use of AI. In July 2024, TCU released a guide on using generative AI. The guide is aimed at all staff, contractors, and interns. Its goal is to support innovation and productivity while ensuring data security, privacy, and reliability.34

The TCU guide sets out clear rules:

- Approved AI solutions: Only certain AI tools are approved for use with confidential information. Tools not approved by TCU, such as ChatGPT and Gemini, can only be used with public data and under strict conditions.

- User accountability: Staff remain fully responsible for any content produced with AI, even if errors are introduced by the tool.

- Human oversight: All automated decisions must be reviewed by a person, especially for strategic or public-facing information.

- Content validation: AI-generated content must be checked for accuracy, fairness, and appropriateness. Staff should not use AI if they cannot validate the output.

- Security and compliance: Use of AI must comply with TCU’s information security policy. Non-compliance can lead to disciplinary action or contract breaches.

- Intellectual property: Staff must not use AI-generated content that may infringe copyright and should identify AI-generated material when needed.

- Development of new solutions: New generative AI tools require approval from the IT unit and management committee. Only the IT executive unit may develop AI applications for external audiences.
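Two of the rules above (approved tools and content validation) lend themselves to a simple pre-use check. The sketch below is a hypothetical illustration only: the tool name, the `UsageRequest` fields and the issue messages are assumptions and do not come from the TCU guide.

```python
from dataclasses import dataclass

# Hypothetical placeholder; the actual list of approved tools is maintained by TCU.
APPROVED_TOOLS = {"hypothetical-internal-assistant"}


@dataclass(frozen=True)
class UsageRequest:
    tool: str
    data_is_public: bool
    output_can_be_validated: bool


def check_usage(request: UsageRequest) -> list[str]:
    """Return a list of policy issues; an empty list means no issue was found."""
    issues = []
    # Unapproved tools may only be used with public data.
    if request.tool not in APPROVED_TOOLS and not request.data_is_public:
        issues.append("unapproved tool used with non-public data")
    # Staff should not use AI if they cannot validate the output.
    if not request.output_can_be_validated:
        issues.append("output cannot be validated by staff")
    return issues


if __name__ == "__main__":
    request = UsageRequest(tool="ChatGPT", data_is_public=False, output_can_be_validated=True)
    print(check_usage(request))
```

A check like this covers only the mechanical parts of the guide; user accountability and human oversight still depend on the judgement of the person using the tool.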

The guide also highlights the risks of bias in AI. It distinguishes between model bias (from training data) and automation bias (the human tendency to trust automated suggestions). The guide stresses that all use of AI must follow TCU’s code of conduct and non-discrimination policies.

Regulation (EU) 2024/1689 of the European Parliament and of the Council of 13 June 2024.↩︎

This is explicitly stated in Recital 12 of the EU AI Act.↩︎

The European Commission published guidelines on AI system definition.↩︎

EU AI Act, Art. 2.↩︎

The European Commission provides a compliance checker. Auditors can use this tool to estimate which AI Act provisions apply to a given AI system.↩︎

The European Commission published guidelines on prohibited artificial intelligence.↩︎

See EU AI Act, Chapter III for more details. The European Commission will publish guidelines on high-risk AI systems (still to be published as of December 2025).↩︎

The European Commission will publish guidelines on these transparency obligations (still to be published as of December 2025).↩︎

For further context, see S. Wachter, Limitations and Loopholes in the EU AI Act and AI Liability Directives: What This Means for the European Union, the United States, and Beyond.↩︎

The European Commission published a code of practice on general-purpose AI models.↩︎

According to EU AI Act, Art. 53(2), these obligations do not apply to providers of general-purpose AI models that are published under open-source or free licences and do not pose systemic risks.↩︎

For more information, see A pro-innovation approach to AI regulation - GOV.UK.↩︎

For more information, see Data protection | ICO.↩︎

For more information, see AI Playbook for the UK Government - GOV.UK.↩︎

For further information, see Algorithmic Transparency Recording Standard Hub - GOV.UK.↩︎

For more information, see 7 Design – The AQuA Book.↩︎

For more information, see Guidance on the Impact Evaluation of AI Interventions (HTML) - GOV.UK.↩︎

For more information, see Principles for security of Machine learning ML - NCSC.GOV.UK.↩︎

For more information, see Guidelines for AI procurement - GOV.UK.↩︎

For more information, see Introduction to AI assurance - GOV.UK.↩︎

For more information, see AI in Audit.↩︎

For more information, see Risk and Importance Framework - GOV.UK.↩︎

For more information, see Guia de uso de Inteligência Artificial no Tribunal de Contas da União (TCU).↩︎